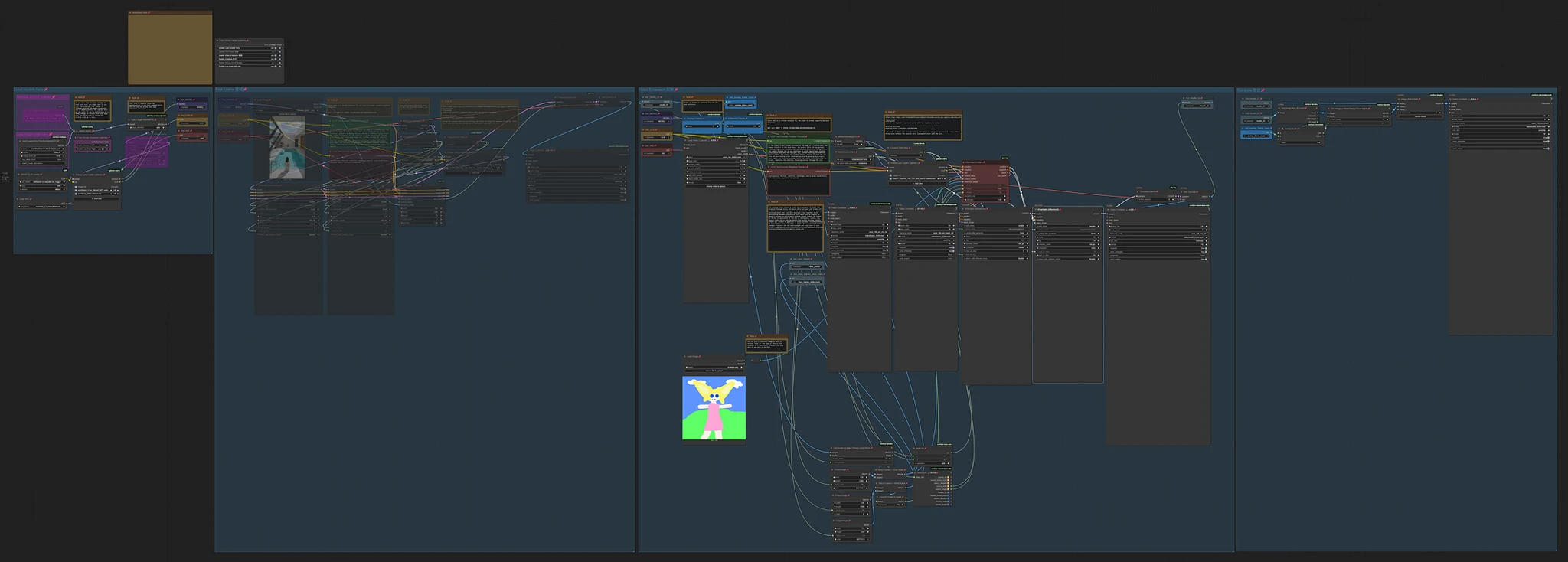

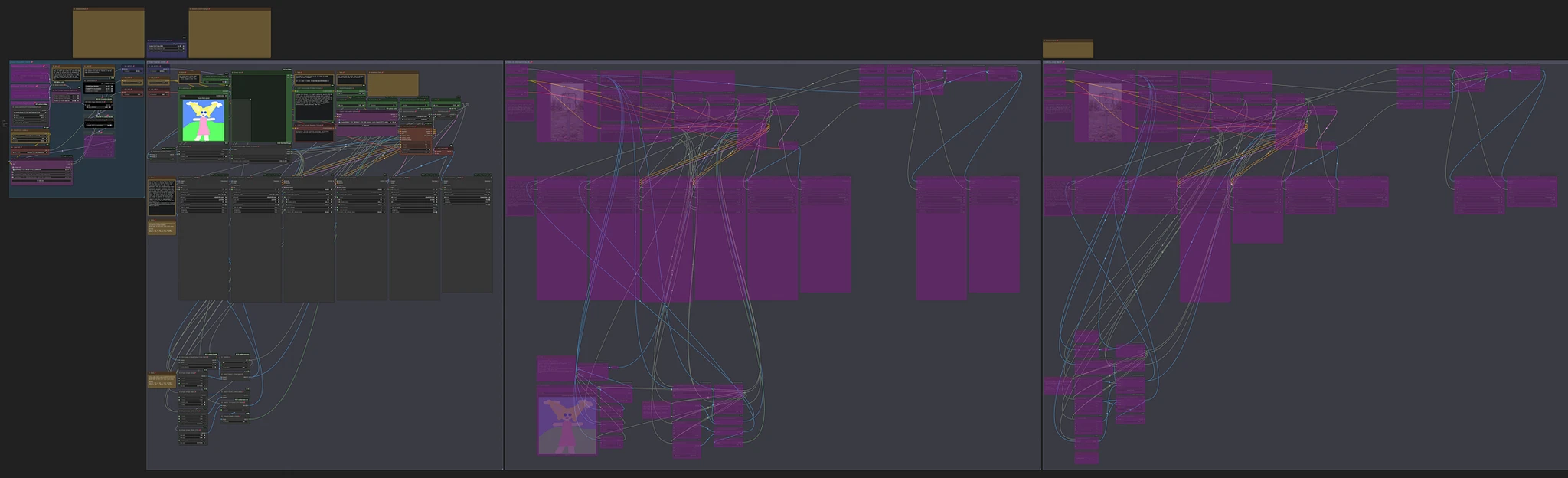

MoviiGen VACE GGUF + CausVid LoRA (14B 720P I2V & Video Extension)

This experimental ComfyUI workflow supports:

Image-to-Video (I2V) and Video Extension (V2V) generation using MoviiGen1.1-VACE-GGUF

Using CausVid LoRA with 2 samplers for faster generations

You can:

Generate the first video as your starting point

Extend the video one segment at a time to gradually build out the full sequence

Cherry-pick the best segments for your final cut

Refine prompts step-by-step as the scene or motion evolves

🔧 Components

🌀 MoviiGen1.1-VACE-GGUF (by Finanzamt_Endgegner)

Based on Wan2.1, fine-tuned on cinematic 720p+ videos

VACE (All-in-One Video Creation and Editing framework) allows motion control using reference videos (like ControlNet for video)

Native support in ComfyUI via GGUF format


Temporal consistency across the full sequence

Model Downloads:

Official Repo:

Related:

⚡ CausVid LoRA (by Kijai)

Speeds up Wan2.1-based video workflows


Works best with two samplers (based on Maraan666):

First few steps: without CausVid LoRA

Remaining steps: with CausVid LoRA

Improves motion without suppressing natural movement
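The two-sampler split described above can be sketched as a small helper that decides which steps each sampler covers. This is only an illustration: `split_steps`, the `use_causvid_lora` flag, and the default of 2 first-pass steps are hypothetical and not taken from the workflow. The remaining keys mirror the inputs of ComfyUI's KSampler (Advanced) node, which is assumed here to be how the two passes are chained.

```python
def split_steps(total_steps: int, first_pass_steps: int = 2):
    """Return settings for two chained samplers (hypothetical helper).

    The first sampler covers the opening steps without the CausVid LoRA,
    the second finishes the remaining steps with the LoRA applied.
    """
    if total_steps < 2:
        raise ValueError("need at least 2 steps to split across two samplers")
    if not 1 <= first_pass_steps < total_steps:
        raise ValueError("first_pass_steps must be between 1 and total_steps - 1")

    first = {
        "use_causvid_lora": False,            # illustrative flag, not a node input
        "steps": total_steps,
        "start_at_step": 0,
        "end_at_step": first_pass_steps,
        "add_noise": "enable",                # first pass starts from fresh noise
        "return_with_leftover_noise": "enable",
    }
    second = {
        "use_causvid_lora": True,             # illustrative flag, not a node input
        "steps": total_steps,
        "start_at_step": first_pass_steps,    # continue where the first pass stopped
        "end_at_step": total_steps,
        "add_noise": "disable",               # latent already carries leftover noise
        "return_with_leftover_noise": "disable",
    }
    return first, second


first, second = split_steps(total_steps=8, first_pass_steps=2)
print(first["start_at_step"], first["end_at_step"])    # 0 2
print(second["start_at_step"], second["end_at_step"])  # 2 8
```

The point of the sketch is that both samplers share the same total step count and that the second one starts exactly where the first one ends, so no step is skipped or repeated.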

LoRA Downloads:

14B: Download

Source: lightx2v/Wan2.1-T2V-14B-CausVid

1.3B: Download

Source: tianweiy/CausVid - Bidirectional Checkpoint 2

Official Repo:

Related:

CivitAI: Causvid Lora, massive speedup for Wan2.1 made by Kijai

Reddit: Causvid Lora, massive speedup for Wan2.1 made by Kijai

▶️ How to Use

🖼️ To Generate Video from an Image as First Frame

Enable "First Frame" from the muter node

Upload your input image


Set generation parameters:

Prompts (positive/negative)

Shift

Steps

Seed

Width / Height

Length (frame count)

Sampler

Scheduler

Click Run

🎥 To Extend an Existing Video

Enable "Video Extension" and "Combine" options

Upload your input video


Set extension parameters:

Overlap Frame Count

Extension Frame Count

Prompts (positive/negative)

Shift

Steps

Seed

Sampler

Scheduler

Click Run
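To reason about how Overlap Frame Count and Extension Frame Count interact, the frame bookkeeping can be sketched as below. This is a rough model under stated assumptions, not a description of the workflow's internals: it assumes the last `overlap` frames of the input are reused as context for the new segment and that the combined video keeps those frames only once. `plan_extension` and its result keys are hypothetical names.

```python
def plan_extension(input_frames: int, overlap: int, extension: int) -> dict:
    """Estimate frame counts for one extension pass (assumed model).

    input_frames: length of the uploaded video, in frames
    overlap:      trailing input frames reused as context
    extension:    number of genuinely new frames to generate
    """
    if not 1 <= overlap <= input_frames:
        raise ValueError("overlap must be between 1 and input_frames")
    if extension < 1:
        raise ValueError("extension must be at least 1 frame")

    context_start = input_frames - overlap
    return {
        # Half-open index range [start, end) of input frames used as context.
        "context_frames": (context_start, input_frames),
        # Frames the sampler handles: context plus new frames.
        "generated_frames": overlap + extension,
        # Final length if overlapping frames are not duplicated when combining.
        "combined_frames": input_frames + extension,
    }


plan = plan_extension(input_frames=81, overlap=16, extension=65)
print(plan["generated_frames"])  # 81
print(plan["combined_frames"])   # 146
```

Under this model, a larger overlap gives the model more context for a smooth transition but leaves fewer new frames per pass for a given segment length.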

⚠️ Challenges and Limitations

The base model is a T2V model, not a true I2V model.

I2V is achieved by feeding a reference image into the VACE node rather than by preserving the image directly.

A true I2V model typically keeps the input image as the exact first frame.

Here, VACE treats the image as loose guidance and does not strictly preserve it.

Examples:

If your source image lacks an object, but your prompt includes it, that object might be added to the first frame.

If the prompt contradicts the image, some original elements may be missing.

Fine details may degrade over time, especially in extended video generations.

📂 References

FAQ (Frequently Asked Questions)

❓ Can I run this with 16GB VRAM?

Yes. I ran it on an RTX 5060 Ti with 16GB VRAM using the Q6_K GGUF model.

With GGUF models, you can choose a version that fits your GPU memory:

Q3_X_X (3-bit) for ~8GB VRAM

Q4_X_X (4-bit) for ~12GB

Q5–Q6 for ~16GB

Q8 for ~24GB+
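The rough tiers above can be turned into a small lookup. This is a minimal sketch that only encodes the approximate figures from this list; `suggest_quant` and `QUANT_TIERS` are hypothetical names, and actual memory use also depends on resolution and clip length.

```python
# (minimum VRAM in GB, quant tier), ordered from largest to smallest.
QUANT_TIERS = [
    (24, "Q8"),
    (16, "Q5-Q6"),
    (12, "Q4"),
    (8, "Q3"),
]


def suggest_quant(vram_gb: float):
    """Return the largest quant tier whose rough VRAM figure fits, else None."""
    for min_vram, quant in QUANT_TIERS:
        if vram_gb >= min_vram:
            return quant
    return None  # below the smallest tier listed above


print(suggest_quant(16))  # Q5-Q6
print(suggest_quant(10))  # Q3
```

Treat the result as a starting point only: if you still hit out-of-memory errors, step down one tier or reduce resolution and length.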

👉 Model & hardware info: https://huggingface.co/QuantStack/MoviiGen1.1-VACE-GGUF

❓ Why do I get errors or bad video clips?

This workflow is still experimental, so crashes or poor results are common. Here are some tips:

OOM (out of memory) error = your GPU doesn’t have enough VRAM

Use a lower quant model (e.g. Q3 or Q4) to reduce memory usage

Lower the video resolution or clip length to avoid overload

If transitions look bad, try adjusting the prompt or other settings

Generate multiple times, then pick the best clips to stitch together

❓ Why does "WanVaceToVideo" give an error with certain resolutions?

The "WanVaceToVideo" node only accepts resolutions where both width and height are divisible by 16. If your input resolution doesn't meet this requirement, you'll likely run into errors or processing failures.

Below are safe resolutions for commonly used aspect ratios, based on standard output heights (320, 368, 480, 544, 640, 720):

✅ Recommended Aspect Ratios & Resolutions (All values divisible by 16)

🖥 32:9 -> 1136x320

📽 21:9 -> 752x320, 864x368, 1120x480, 1264x544

🖼 2:1 -> 640x320, 736x368, 960x480, 1088x544, 1280x640

📺 16:9 -> 576x320, 656x368, 848x480, 960x544, 1136x640, 1280x720

🖥 16:10 -> 512x320, 592x368, 768x480, 864x544, 1024x640, 1152x720

📷 3:2 -> 480x320, 560x368, 720x480, 816x544, 960x640, 1088x720

🖼 4:3 -> 432x320, 496x368, 640x480, 720x544, 848x640, 960x720

🖼 5:4 -> 400x320, 464x368, 608x480, 688x544, 800x640, 896x720
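For sizes not listed here, the same rule can be applied programmatically. The sketch below checks divisibility by 16 and snaps the width for a given height and aspect ratio to the nearest multiple of 16 (ties rounded up), which matches the entries in the list above. The function names are hypothetical helpers, not part of ComfyUI.

```python
def is_valid_resolution(width: int, height: int, multiple: int = 16) -> bool:
    """True if both dimensions are positive and divisible by `multiple`."""
    return (
        width > 0
        and height > 0
        and width % multiple == 0
        and height % multiple == 0
    )


def snap_width(height: int, ratio_w: int, ratio_h: int, multiple: int = 16) -> int:
    """Width closest to height * ratio_w / ratio_h that is divisible by `multiple`.

    Uses integer arithmetic so exact halves round up instead of depending
    on floating-point or banker's rounding.
    """
    if height <= 0 or height % multiple != 0:
        raise ValueError(f"height must be a positive multiple of {multiple}")
    # floor(exact_width / multiple + 0.5), computed without floats
    units = (2 * height * ratio_w + multiple * ratio_h) // (2 * multiple * ratio_h)
    return units * multiple


print(is_valid_resolution(1280, 720))   # True
print(is_valid_resolution(1920, 1080))  # False (1080 is not divisible by 16)
print(snap_width(480, 16, 9))           # 848
print(snap_width(368, 3, 2))            # 560
```

Snapping the width while keeping a height from the standard list means the aspect ratio is only approximate, for example 848x480 is slightly narrower than exact 16:9.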


Latest version (v1.0.0): 1 File
