Originally posted to Hugging Face by nitrosocke

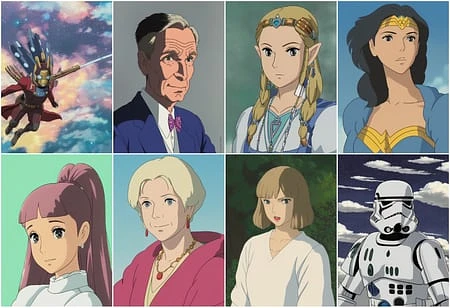

This is a fine-tuned Stable Diffusion model trained on images from modern anime feature films by Studio Ghibli. Include the tokens "ghibli style" in your prompts to get the effect.
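Every sample prompt on this page starts with the trigger tokens. As a minimal sketch (the helper name `add_style_token` is hypothetical and not part of any UI or library), you can normalize prompts so the tokens are always present:

```python
# Sketch: make sure a prompt carries the "ghibli style" trigger tokens.
# add_style_token is an illustrative helper, not an API of any Stable Diffusion UI.
STYLE_TOKEN = "ghibli style"


def add_style_token(prompt: str, token: str = STYLE_TOKEN) -> str:
    """Prefix `prompt` with `token` unless it already appears (case-insensitive)."""
    if token.lower() in prompt.lower():
        return prompt
    return f"{token} {prompt}".strip()


print(add_style_token("VW beetle"))               # ghibli style VW beetle
print(add_style_token("ghibli style VW beetle"))  # ghibli style VW beetle (unchanged)
```

The check is a plain substring match, so the helper leaves a prompt alone if the tokens appear anywhere in it, not only at the start.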

If you enjoy my work and want to test new models before release, please consider supporting me.

The original page showed sample images of characters, cars and animals, and landscapes rendered with the model. The landscape prompts were:

Prompt: ghibli style beautiful Caribbean beach tropical (sunset)
Negative prompt: soft blurry

Prompt: ghibli style ice field white mountains ((northern lights)) starry sky low horizon
Negative prompt: soft blurry
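The round brackets in the prompts on this page, such as (sunset) and ((northern lights)), follow AUTOMATIC1111's emphasis syntax, where each enclosing pair of round brackets multiplies the attention given to the enclosed words by 1.1, so ((northern lights)) is weighted about 1.21. The sketch below computes those weights for plain nested round brackets only. It assumes the 1.1 factor and deliberately ignores A1111's other forms such as (text:1.5), [text] and escaped brackets; the function name is hypothetical.

```python
from __future__ import annotations


def emphasis_weights(prompt: str, factor: float = 1.1) -> list[tuple[str, float]]:
    """Split `prompt` into (text, weight) chunks.

    Each enclosing pair of round brackets multiplies the weight by `factor`.
    Simplified sketch: ignores "(text:1.5)", square brackets and escapes.
    """
    chunks: list[tuple[str, float]] = []
    buf: list[str] = []
    depth = 0

    def flush() -> None:
        # Emit the buffered text (if any) at the current nesting depth.
        text = "".join(buf).strip()
        if text:
            chunks.append((text, round(factor ** depth, 4)))
        buf.clear()

    for ch in prompt:
        if ch == "(":
            flush()
            depth += 1
        elif ch == ")":
            flush()
            depth = max(depth - 1, 0)  # tolerate an unbalanced closing bracket
        else:
            buf.append(ch)
    flush()
    return chunks


for text, weight in emphasis_weights(
    "ghibli style ice field white mountains ((northern lights)) starry sky low horizon"
):
    print(f"{weight:>5}  {text}")
```

Splitting the text at every bracket keeps the logic to a single pass with a depth counter, which is enough to show how nesting compounds the weight.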

Prompt and settings for the Storm Trooper:
Prompt: ghibli style (storm trooper)
Negative prompt: (bad anatomy)
Steps: 20, Sampler: DPM++ 2M Karras, CFG scale: 7, Seed: 3450349066, Size: 512x704

Prompt and settings for the VW Beetle:
Prompt: ghibli style VW beetle
Negative prompt: soft blurry
Steps: 30, Sampler: Euler a, CFG scale: 7, Seed: 1529856912, Size: 704x512
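The settings lines for the Storm Trooper and VW Beetle use AUTOMATIC1111's "Key: value, Key: value" format, where Size is width x height. If you want to reproduce them in another tool, a minimal sketch like the one below can turn such a line into a dict. The key names num_inference_steps, guidance_scale, width and height are assumed to match the arguments of diffusers' StableDiffusionPipeline call. The seed is usually supplied there through a torch.Generator, and the sampler corresponds to choosing a scheduler, which this sketch does not attempt, so both are kept as plain values. The function name is hypothetical.

```python
# Sketch: parse an AUTOMATIC1111-style settings line into a dict.
# Keys follow diffusers StableDiffusionPipeline argument names where one exists;
# "seed" and "sampler" have no direct call argument and are returned as-is.


def parse_settings(line: str) -> dict:
    fields = {}
    for part in line.split(","):
        key, sep, value = part.partition(":")
        if sep:  # skip fragments that are not "Key: value"
            fields[key.strip()] = value.strip()
    width, height = (int(n) for n in fields["Size"].split("x"))  # "Size: WxH"
    return {
        "num_inference_steps": int(fields["Steps"]),
        "guidance_scale": float(fields["CFG scale"]),
        "width": width,
        "height": height,
        "seed": int(fields["Seed"]),
        "sampler": fields["Sampler"],
    }


storm_trooper = parse_settings(
    "Steps: 20, Sampler: DPM++ 2M Karras, CFG scale: 7, Seed: 3450349066, Size: 512x704"
)
print(storm_trooper)
```

Splitting on commas works for these two lines because none of the values contain a comma. A prompt or sampler name that does would need a more careful parser.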

This model was trained with ShivamShrirao's diffusers-based DreamBooth training script, using prior-preservation loss and the train-text-encoder flag, for 15,000 steps.
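For reference, the objective that DreamBooth with prior preservation optimizes can be sketched as follows. This uses the noise-prediction form used by diffusers' DreamBooth training. It follows the general DreamBooth formulation, not a confirmed description of this model's run, and the prior-loss weight λ and the class prompt used here are not stated on the page.

$$
\mathcal{L}(\theta) = \mathbb{E}_{z, c, \epsilon, t}\Big[\lVert \epsilon - \epsilon_\theta(z_t, t, c) \rVert_2^2\Big] + \lambda\, \mathbb{E}_{z^{\mathrm{pr}}, c^{\mathrm{pr}}, \epsilon', t'}\Big[\lVert \epsilon' - \epsilon_\theta(z^{\mathrm{pr}}_{t'}, t', c^{\mathrm{pr}}) \rVert_2^2\Big]
$$

Here $z_t = \sqrt{\bar\alpha_t}\, z + \sqrt{1-\bar\alpha_t}\,\epsilon$ is the noised latent of a training image, $c$ is the text embedding of its prompt (containing the trigger tokens), and $z^{\mathrm{pr}}$ and $c^{\mathrm{pr}}$ are the latent and prompt embedding of a class image generated by the original model. The second term keeps the fine-tuned model close to what the base model produced for the generic class. With the train-text-encoder flag, $\theta$ includes the text encoder as well as the UNet, so the embeddings $c$ and $c^{\mathrm{pr}}$ are also learned.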

What is Ghibli Diffusion?

Ghibli Diffusion is an image generation model distributed as a Safetensors / Checkpoint file, created by nitrosocke and uploaded by the AI community user civitai. It is derived from Stable Diffusion 1.5 (SD 1.5) and was fine-tuned on images from Studio Ghibli feature films, so it generates images in that style when your prompt includes the ghibli style tokens.

With an average rating of 4.75 across more than four user ratings, Ghibli Diffusion is well regarded by users for generating images from text prompts.

Can I download Ghibli Diffusion?

Yes. You can download the latest version (V1) of Ghibli Diffusion from its model page.

How to use Ghibli Diffusion?

To use Ghibli Diffusion, download the model checkpoint file and set up a UI for running Stable Diffusion models (for example, AUTOMATIC1111). Then give the model a detailed text prompt to generate an image, and experiment with different prompts and settings until you get the results you want. If this sounds complicated, check out our introductory guide to Stable Diffusion. To dive deeper into AI image generation and learn how to set up AUTOMATIC1111 to use Safetensors / Checkpoint models like Ghibli Diffusion, check out our crash course in AI image generation.
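Before loading a downloaded .safetensors checkpoint into a UI, you can sanity-check the file without loading any weights. In the safetensors format, a file starts with an 8-byte little-endian unsigned integer giving the header length, followed by a UTF-8 JSON header that maps tensor names to their dtype, shape and data offsets, plus an optional "__metadata__" entry. Unlike pickle-based .ckpt files, reading this header executes no embedded code. The sketch below uses only the standard library. The function names are hypothetical, and the 100 MB header cap is an arbitrary sanity limit chosen for this sketch.

```python
from __future__ import annotations

import json
import struct
from typing import BinaryIO

# Arbitrary sanity cap for this sketch; real headers are much smaller.
MAX_HEADER_BYTES = 100 * 1024 * 1024


def read_header(f: BinaryIO) -> dict:
    """Read the JSON header of a .safetensors stream without reading tensor data."""
    prefix = f.read(8)
    if len(prefix) != 8:
        raise ValueError("too short to be a .safetensors file")
    (header_len,) = struct.unpack("<Q", prefix)  # little-endian unsigned 64-bit
    if header_len > MAX_HEADER_BYTES:
        raise ValueError(f"implausible header length: {header_len}")
    raw = f.read(header_len)
    if len(raw) != header_len:
        raise ValueError("truncated header")
    return json.loads(raw.decode("utf-8"))  # invalid JSON also raises ValueError


def split_header(header: dict) -> tuple[dict, dict]:
    """Separate tensor entries from the optional "__metadata__" entry."""
    metadata = header.get("__metadata__", {})
    tensors = {k: v for k, v in header.items() if k != "__metadata__"}
    return tensors, metadata


def inspect_checkpoint(path: str) -> tuple[dict, dict]:
    """Open a checkpoint file and return (tensor entries, metadata)."""
    with open(path, "rb") as f:
        return split_header(read_header(f))


# Usage (hypothetical filename):
# tensors, metadata = inspect_checkpoint("ghibli-diffusion-v1.safetensors")
# print(len(tensors), "tensors", metadata)
```

A file that fails these checks is likely truncated or not a safetensors file at all, which is cheaper to discover here than after a UI tries to load it.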


Info

Latest version (V1): 1 File

1 Version
