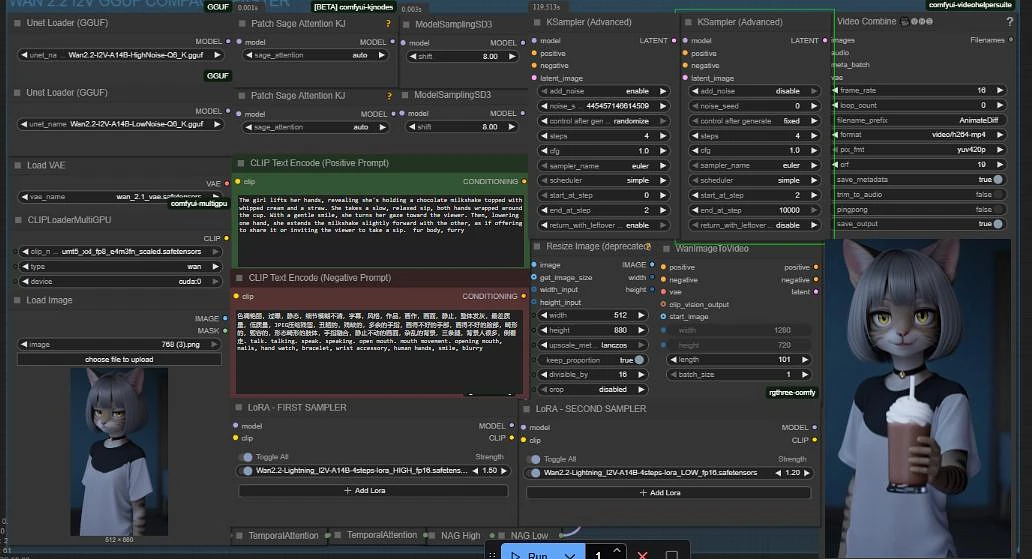

I made a compact workflow for WAN 2.2 GGUF. You can also bypass the GGUF models and use base models such as FP8 instead.

Links:

Lightx2v LoRA: https://huggingface.co/Kijai/WanVideo_comfy/tree/main/Lightx2v

GGUF: https://huggingface.co/QuantStack/Wan2.2-I2V-A14B-GGUF/tree/main

or https://huggingface.co/bullerwins/Wan2.2-I2V-A14B-GGUF/tree/main

You need both a High (high-noise) model and a Low (low-noise) model, and the two must use the same quantization. The VAE is the same one used for WAN 2.1.
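If you want to double-check that the two GGUF files you downloaded really share a quantization, a small script can compare the quantization tag in their filenames. This is only a sketch: the filenames below are hypothetical examples, and the regular expression assumes the common GGUF tag style (`Q4_K_M`, `Q6_K`, `Q8_0`), which may not cover every file in the repositories above.

```python
import re

# Assumed tag style for GGUF quantizations, e.g. Q4_K_M, Q5_K_S, Q6_K, Q8_0.
QUANT_RE = re.compile(r"(Q\d+_(?:K_[SML]|K|\d+))", re.IGNORECASE)


def quant_tag(filename):
    """Return the quantization tag found in a filename, or None if absent."""
    match = QUANT_RE.search(filename)
    return match.group(1).upper() if match else None


def same_quantization(high_file, low_file):
    """True only if both files carry a tag and the tags are identical."""
    high_tag = quant_tag(high_file)
    low_tag = quant_tag(low_file)
    return high_tag is not None and high_tag == low_tag


if __name__ == "__main__":
    # Hypothetical filenames, used only for illustration.
    high = "Wan2.2-I2V-A14B-HighNoise-Q4_K_M.gguf"
    low = "Wan2.2-I2V-A14B-LowNoise-Q4_K_M.gguf"
    print(quant_tag(high), quant_tag(low), same_quantization(high, low))
```

Run it with your own High and Low filenames before loading them into the workflow; a `False` result means the pair is mismatched or one of the names has no recognizable tag.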

Make sure to update your ComfyUI.

Latest version: v1.2 + Lora lightning 2.2

This version adds the new acceleration LoRAs with their respective adjustments.
