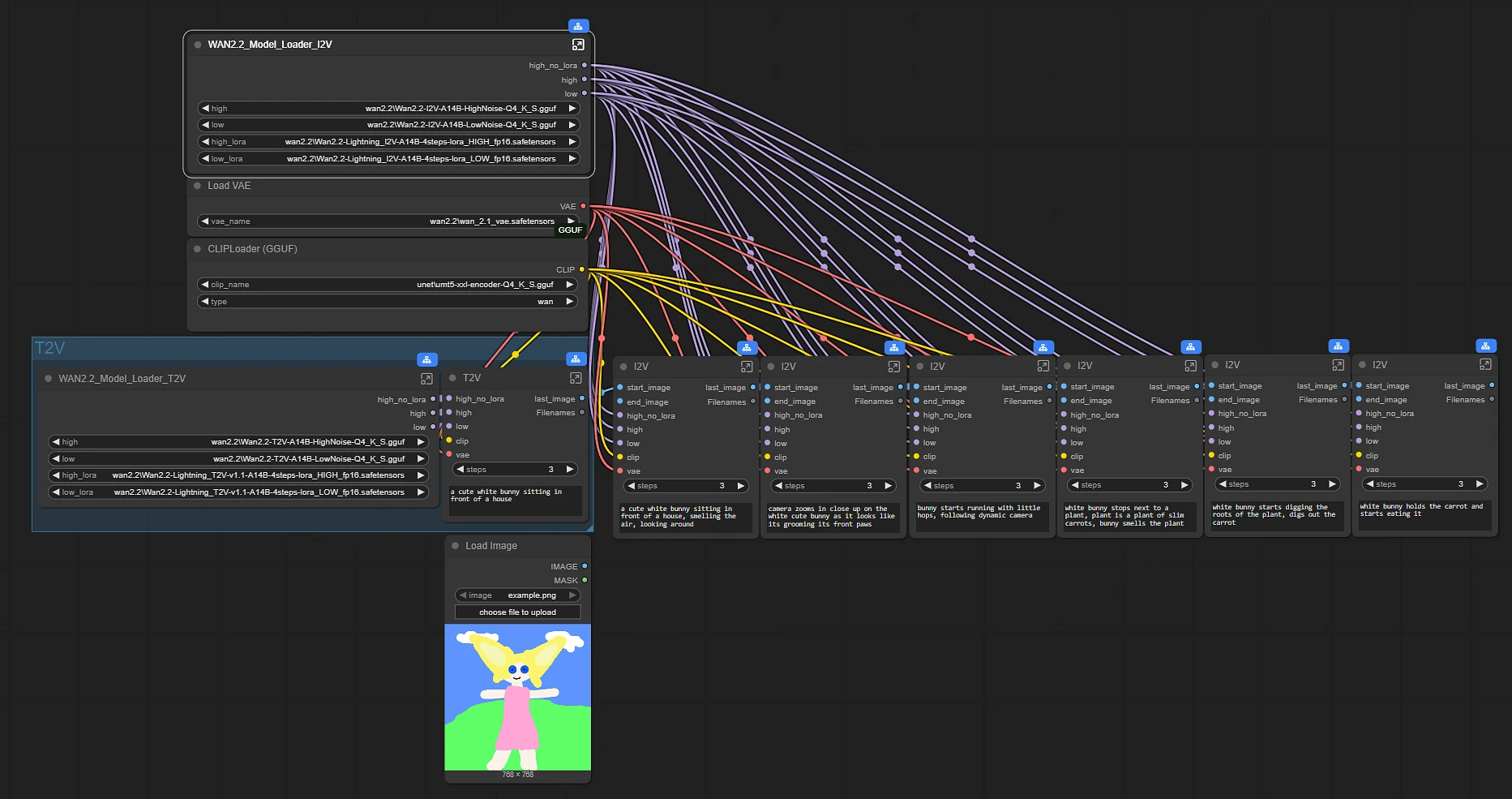

This workflow is simply an experiment with ComfyUI's new subnodes.

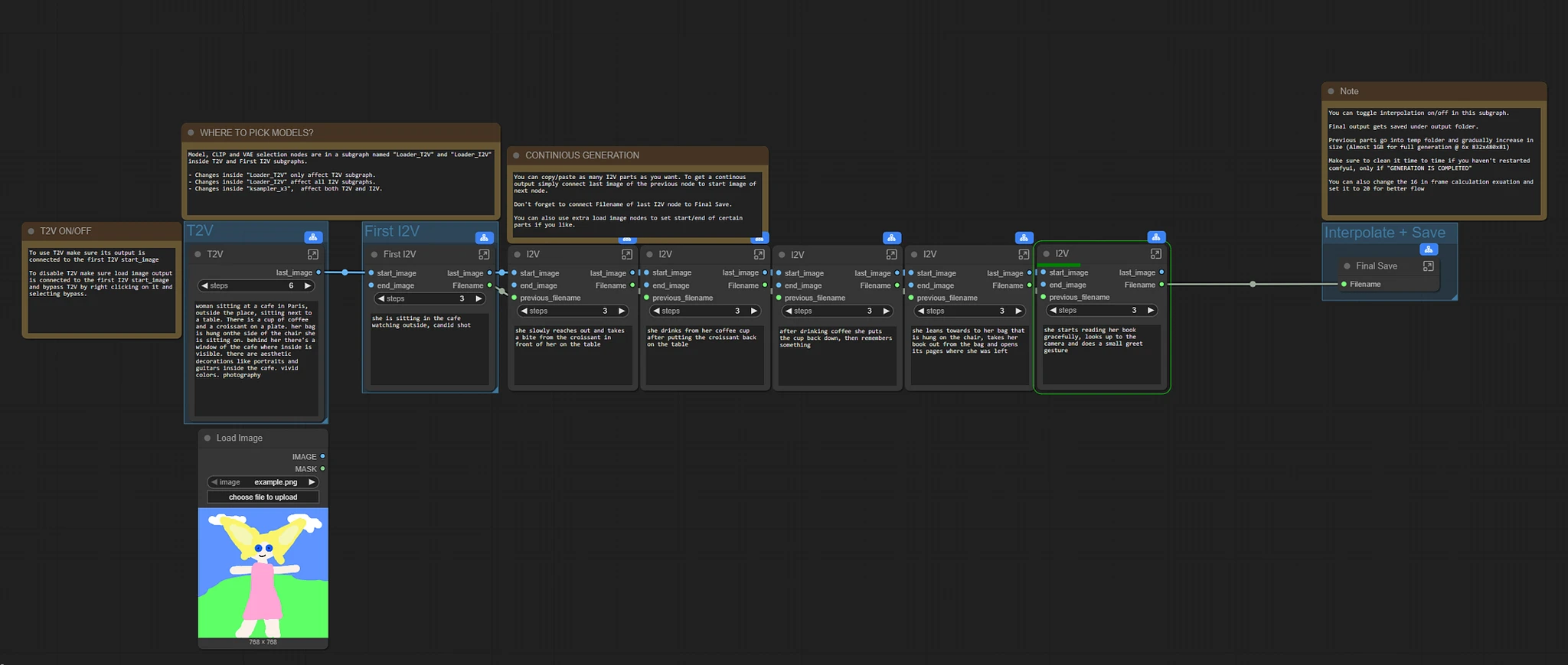

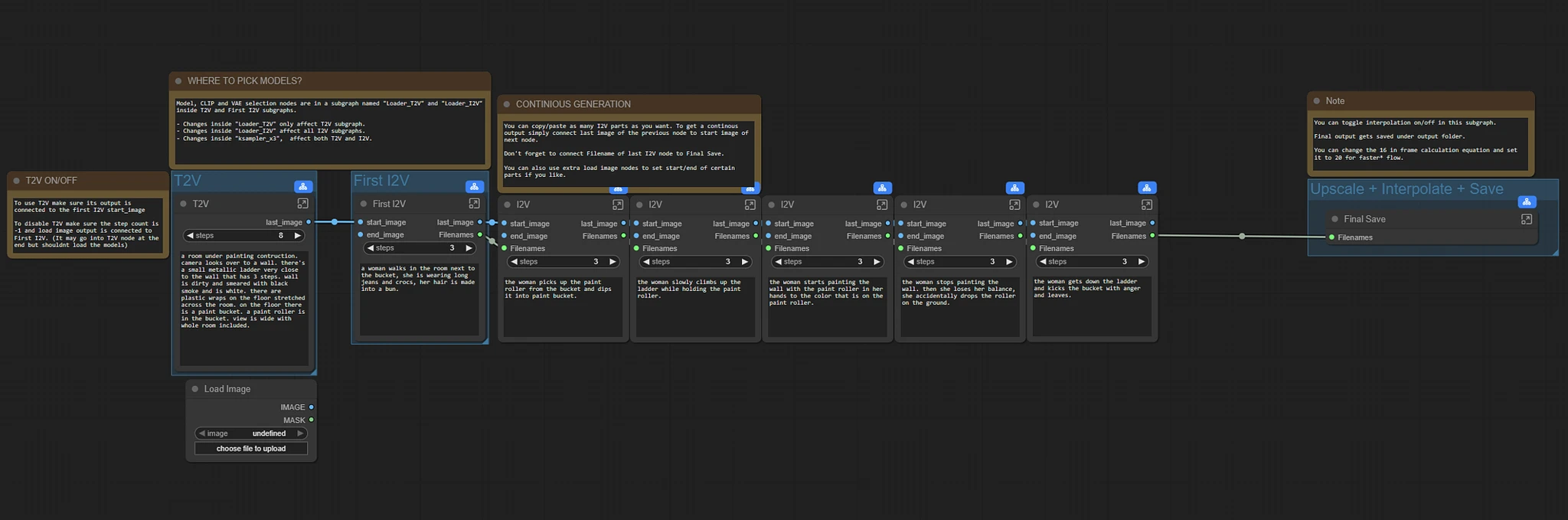

It works by feeding the last frame of one video generation in as the first frame of the next generation. Instead of a huge spaghetti of nodes, you get a single I2V node per part; these nodes all share the same KSampler subnode, and the process iterates over and over. You can think of it as FramePack inside ComfyUI, except that you can also prompt each generation separately. I kept the negative prompt common to all parts, but that could be made dynamic as well.
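The chaining idea can be sketched outside ComfyUI in plain Python. This is only an illustration, not the workflow itself: `fake_generate_segment` is a hypothetical stand-in for one generation pass, and frames are represented as strings rather than images.

```python
from typing import Callable, List, Optional

Frame = str  # placeholder type; a real frame would be an image tensor


def fake_generate_segment(prompt: str, start_frame: Optional[Frame], length: int) -> List[Frame]:
    """Hypothetical stand-in for one pass (text-to-video when start_frame is None)."""
    # An image-to-video pass begins with the frame it was given.
    frames: List[Frame] = [] if start_frame is None else [start_frame]
    for i in range(len(frames), length):
        frames.append(f"{prompt}#{i}")
    return frames


def chain_segments(
    prompts: List[str],
    generate: Callable[[str, Optional[Frame], int], List[Frame]] = fake_generate_segment,
    length: int = 5,
) -> List[List[Frame]]:
    """Feed the last frame of each segment into the next one as its start frame."""
    segments: List[List[Frame]] = []
    start_frame: Optional[Frame] = None  # the first pass has no start image
    for prompt in prompts:
        frames = generate(prompt, start_frame, length)
        segments.append(frames)
        start_frame = frames[-1]  # hand the last frame to the next pass
    return segments
```

Each prompt drives its own segment, which mirrors how every I2V part in the workflow gets a separate prompt while reusing the same sampling logic.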

I did not implement a stitching option, since I do that in basic video editing software, but it could be added as well.

Edit: I forgot to save the output videos to the output folder, since I later decided not to implement merging them. As a result, the files end up in the comfyui/temp folder. (This folder is cleaned up the next time you run ComfyUI.)

The first video in v0.1 is 834x480, sampled 1 + 3 + 3, and took 23 minutes to generate. The second one is 624x368, sampled 1 + 2 + 2, and took 13 minutes. The second video was also generated with the up-to-date version, which includes the fix for last_frame showing twice.

Don't forget to update your ComfyUI frontend, since old versions were quite buggy. It might still have some bugs, so be aware. Update command for the portable build:

.\python_embeded\python.exe -m pip install comfyui_frontend_package --upgrade

The sampling process is 1 + 3 + 3: the first step runs with no speed LoRA applied, and the remaining steps are the basic high + low sampling passes with the speed LoRA applied. Everything is pretty much customizable, but remember: "KSAMPLER SUBNODE IS USED BY ALL MAIN NODES SO CHANGES APPLY TO ALL OF THEM AT ONCE!" The same goes for the I2V_latent subnode if you want to change the output resolution and length of each part.
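To make the 1 + 3 + 3 split concrete, here is a minimal sketch of how the step ranges line up. The class and function names are hypothetical, and the exact wiring of the samplers inside the subnode is an assumption; only the step counts and the speed LoRA on/off pattern come from the description above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SamplerPass:
    name: str         # illustrative label for the pass
    start_step: int   # first step handled by this pass (inclusive)
    end_step: int     # step at which this pass stops (exclusive)
    speed_lora: bool  # whether the speed LoRA is applied


def build_schedule(no_lora_steps: int = 1, high_steps: int = 3, low_steps: int = 3) -> List[SamplerPass]:
    """Split the total step count into consecutive passes."""
    passes: List[SamplerPass] = []
    cursor = 0
    for name, count, lora in (
        ("no_lora", no_lora_steps, False),  # first step, no speed LoRA
        ("high", high_steps, True),         # high pass with speed LoRA
        ("low", low_steps, True),           # low pass with speed LoRA
    ):
        passes.append(SamplerPass(name, cursor, cursor + count, lora))
        cursor += count
    return passes
```

Calling `build_schedule(1, 2, 2)` gives the shorter 1 + 2 + 2 variant used for the second example video.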

To extend the generation, click one of the I2V subnodes and copy it, then press Ctrl + Shift + V to paste it with all its connections. After that, connect the last image of the previous node to the new node's start_image input. You can also connect a Load Image node to the initial I2V start_image input to skip T2V generation (don't bypass nodes, as that might break the subnode).

I couldn't get it to work with native models, since it kept crashing on my system, so everything is implemented with GGUF quantized models. Feel free to change the process or to disable the patch sage attention and compile model nodes in the model loader subnode, but the speed hit is noticeable.

On my 4070 Ti with sage++ and torch compile enabled, T2V + 6x I2V (30s total) took about 20-25 minutes.

Hope we get even better workflows from the community in the future :)

About this version: v0.3

Make sure you are running ComfyUI frontend 1.26.2. I've been told that linked subgraphs are on the subgraph feature list and will eventually work flawlessly again. Until then, run this command to make sure you are on the right version:

.\python_embeded\python.exe -m pip install comfyui_frontend_package==1.26.2

Changes:

Optimized the save function to save parts and only merge them at the end; it takes less than 20% of the space used by the previous version.

Added a very subtle temporal motion blur to the last frame. Transitions look smoother when not much is going on, but sharp turns can still happen, so it works sometimes and at other times you can see the motion change direction. The values could be tweaked further later on.

Added a basic LoRA example in each part that only affects that part.

Added basic upscale-using-model support to the final save node.

Removed the MP4 converter, since most editing software supports the output type; you can use VLC to view the part files.

Changed the default resolution to 480x832 (vertical).
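The temporal motion blur on the last frame is not described in detail. One possible reading, shown here purely as an assumption, is to blend a small share of the preceding frame into the final frame before handing it to the next part. The function name, the `strength` parameter, and the use of flat lists of pixel values are all hypothetical.

```python
from typing import List

Frame = List[float]  # flattened pixel values; a real frame would be an image tensor


def blur_last_frame(frames: List[Frame], strength: float = 0.1) -> List[Frame]:
    """Return a copy of frames whose last frame is mixed with the one before it."""
    if len(frames) < 2:
        return [list(f) for f in frames]  # nothing to blend with
    prev, last = frames[-2], frames[-1]
    # Weighted average: mostly the last frame, plus a small share of the previous one.
    blended = [(1.0 - strength) * l + strength * p for l, p in zip(last, prev)]
    return [list(f) for f in frames[:-1]] + [blended]
```

A small `strength` keeps the effect subtle, in line with the note that transitions look smoother only when little is moving.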

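Since v0.3 depends on frontend 1.26.2, it can help to confirm what is actually installed. The sketch below uses Python's standard `importlib.metadata`; the helper names are hypothetical, and it has to be run with the same interpreter ComfyUI uses (for the portable build, the one under python_embeded).

```python
from importlib import metadata
from typing import Optional

REQUIRED = "1.26.2"  # version named in the v0.3 notes


def installed_version(dist_name: str = "comfyui_frontend_package") -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def describe(found: Optional[str], required: str = REQUIRED) -> str:
    """Summarize whether the installed version matches the required one."""
    if found is None:
        return "not installed"
    if found == required:
        return "ok"
    return f"mismatch: found {found}, expected {required}"
```

Printing `describe(installed_version())` reports whether the pinned version is in place.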