Probably not the final version.

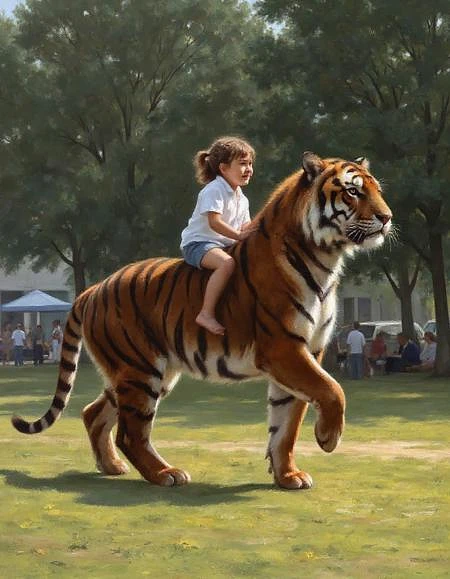

Trained using this website's tools - thank you CivitAI! Works great!

Generation preferences: My preferred sampler in ComfyUI is ipndm (for all Flux work, not just this LoRA), with the sgm_uniform or beta scheduler. With this LoRA you can get away with fewer steps than for photographic output: 18 or so. Stick with 1024px dimensions - smaller images turn out noisy and pretty ugly. My current FluxGuidance is 2.8, but I need to explore that further.
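For anyone who wants to keep these settings next to their workflow, here is a minimal sketch that collects them in a plain Python dictionary and flags a setup that departs from them. The key names and the `check_settings` helper are made up for illustration and are not part of ComfyUI; treating "smaller" as "either side under 1024px" is also an assumption. Steps and guidance are recorded but not checked, since both are still rough values.

```python
# Settings from the notes above, collected in one place.
# Key names are illustrative only; map them onto your own workflow.
PREFERRED = {
    "sampler_name": "ipndm",
    "schedulers": ("sgm_uniform", "beta"),
    "steps": 18,        # "18 or so", not a hard rule
    "guidance": 2.8,    # FluxGuidance value, still being explored
    "min_side": 1024,   # assumption: either side below this counts as "smaller"
}


def check_settings(sampler_name, scheduler, width, height):
    """Return a list of warnings where a setup departs from the notes."""
    warnings = []
    if sampler_name != PREFERRED["sampler_name"]:
        warnings.append(f"sampler is {sampler_name}, notes prefer ipndm")
    if scheduler not in PREFERRED["schedulers"]:
        warnings.append(f"scheduler is {scheduler}, notes prefer sgm_uniform or beta")
    if min(width, height) < PREFERRED["min_side"]:
        warnings.append("image is under 1024px on one side; expect noisy output")
    return warnings


if __name__ == "__main__":
    for line in check_settings("euler", "beta", 768, 768):
        print(line)
```

Running the file directly prints one warning per mismatch; an empty list means the setup matches the preferences described here.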

Flux is an interesting model for fine art style training. It has really, really strong photographic tendencies. Since this LoRA is a fairly realist style (not photorealist, but definitely not too abstract), output can sometimes come out as an outright photo, or be too heavily influenced by Flux's photographic look - for instance by introducing something like bokeh, which this traditional style of painting should not render.

I'll keep trying to iterate on it. It's a slow process, given the need to rely on this site for training and an underpowered local machine for image generation.

Besides the leaning-toward-photography issue, output from this LoRA also has a tendency to generate what look like JPEG compression artifacts. Flux seems to get confused by the oil painting surface textures. In a really close-up shot, such textures are amazing. But pull back a little bit and it can get confused.

About this version: v1.0

initial version
