Flux1-DedistilledMixTuned-V1

v3.0-Krea fp8

Wash away the greasy look left by distillation and return the model to its original character.

If you like the model, please post the images you generate with it. Thanks a lot!

===========================================================================

Among the models tuned from Flux.1 Schnell, this may be the open-source, commercially usable Schnell base model that strikes the best balance of image quality, detail, realism, and style diversity. It generates images quickly (4-8 steps), follows the composition style of the original Flux Schnell, and has strong prompt adherence.

Based on FLUX.1-schnell, merged with LibreFLUX, and fine-tuned with ComfyUI, Block_Patcher_ComfyUI, ComfyUI_essentials, and other tools. Recommended: 4-8 steps; 4 steps is usually enough. Quality and realism are greatly improved compared with other Flux.1 Schnell models.

===========================================================================

Among fast Flux fine-tunes (under 10 steps), this may be the best-quality 6-10 step model. It follows the style of the original Flux.1 Dev, has strong prompt adherence, surpasses Flux.1 Dev in some details, and is the base model that comes closest to Flux.1 Pro.

Also available on Hugging Face (huggingface.co).

Based on Flux-Fusion-V2, merged with flux-dev-de-distill, and fine-tuned with ComfyUI, Block_Patcher_ComfyUI, ComfyUI_essentials, and other tools. Recommended: 6-10 steps. Quality is greatly improved compared with other Flux.1 models.
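The step recommendations quoted above (4-8 for the Schnell-based model, 6-10 for the Dev-based model) can be kept in a small lookup so a script can warn about out-of-range settings. This is only a sketch: the variant labels and the `check_steps` helper are hypothetical names chosen for illustration, not part of any tool mentioned here.

```python
# Recommended sampling-step ranges as quoted in the descriptions above.
# The keys are illustrative labels, not official variant names.
RECOMMENDED_STEPS = {
    "schnell-based": (4, 8),
    "dev-based": (6, 10),
}


def check_steps(variant: str, steps: int) -> bool:
    """Return True if `steps` lies inside the recommended range for `variant`."""
    low, high = RECOMMENDED_STEPS[variant]
    return low <= steps <= high


if __name__ == "__main__":
    for variant, steps in [("schnell-based", 4), ("dev-based", 4)]:
        status = "ok" if check_steps(variant, steps) else "outside recommended range"
        print(f"{variant} at {steps} steps: {status}")
```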

GGUF Q8_0 / Q5_1 / Q4_1 quantized model files have been tested and uploaded at the same time. Over-quantization would lose the advantages of this high-speed, high-precision model, so no other quantized versions will be provided. If you need one, download the FP8 model file and quantize it yourself following the tips below.

===========================================================================

Recommendations:

The UNET versions (model only) need separate text encoders and a VAE. I recommend the CLIP and text encoder models below, which give better prompt guidance:

Text Encoders: https://huggingface.co/silveroxides/CLIP-Collection/blob/main/t5xxl_flan_latest-fp8_e4m3fn.safetensors

VAE: https://huggingface.co/black-forest-labs/FLUX.1-schnell/tree/main/vae

GGUF version: you need to install the GGUF model support nodes, https://github.com/city96/ComfyUI-GGUF
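The text encoder link above points at a Hugging Face file page (`/blob/`), which opens a web page rather than the file itself. A minimal sketch, assuming the usual Hugging Face convention that swapping `/blob/` for `/resolve/` gives the direct download URL; the helper name `to_download_url` is hypothetical:

```python
TEXT_ENCODER_PAGE = (
    "https://huggingface.co/silveroxides/CLIP-Collection/blob/main/"
    "t5xxl_flan_latest-fp8_e4m3fn.safetensors"
)


def to_download_url(page_url: str) -> str:
    """Convert a Hugging Face file-page URL (/blob/) to a direct-download URL (/resolve/)."""
    marker = "/blob/"
    if marker not in page_url:
        raise ValueError(f"not a Hugging Face file-page URL: {page_url}")
    # Replace only the first occurrence so the file path itself is left untouched.
    return page_url.replace(marker, "/resolve/", 1)


if __name__ == "__main__":
    print(to_download_url(TEXT_ENCODER_PAGE))
```

The VAE link is a folder (`/tree/`) rather than a single file, so this helper deliberately rejects it; pick the file inside that folder first.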

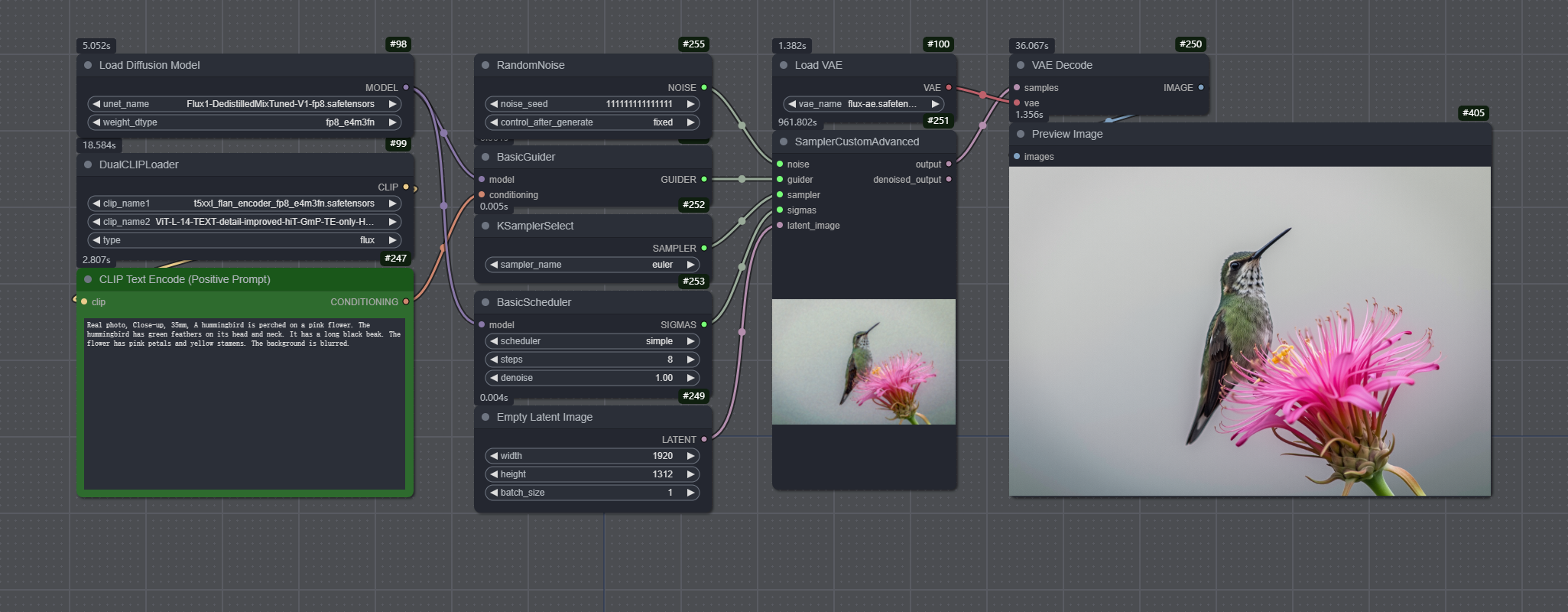

Simple workflow: a very simple workflow is enough, and it needs no other ComfyUI custom nodes (for the GGUF version, use the Unet Loader (GGUF) node from city96's ComfyUI-GGUF):

Thanks to:

https://huggingface.co/black-forest-labs/FLUX.1-dev, a very good open source T2I model, under the FLUX.1 [dev] Non-Commercial License.

https://huggingface.co/black-forest-labs/FLUX.1-schnell, a very good open source T2I model, under the Apache-2.0 license.

https://huggingface.co/Anibaaal, Flux-Fusion is a very good mixed and tuned model.

https://huggingface.co/nyanko7, Flux-dev-de-distill is a great experimental project! Thanks for the inference.py scripts.

https://huggingface.co/jimmycarter/LibreFLUX, a free, de-distilled FLUX model; it is an Apache 2.0 version of FLUX.1-schnell.

https://huggingface.co/MonsterMMORPG, Furkan has shared many Flux.1 model testing and tuning tutorials, including some tests specific to de-distilled models.

https://github.com/cubiq/Block_Patcher_ComfyUI, cubiq's Flux blocks patcher sampler let me run many tests to learn how changes to the Flux.1 block parameter values affect image generation. His ComfyUI_essentials has a FluxBlocksBuster node that makes adjusting the block values easy. Great work!

https://huggingface.co/twodgirl, shared the model quantization script and the test dataset.

https://huggingface.co/John6666, shared the model conversion script and the model collections.

https://github.com/city96/ComfyUI-GGUF, native ComfyUI support for GGUF-quantized models.

https://github.com/leejet/stable-diffusion.cpp, provides pure C/C++ GGUF model conversion tools.

Note: To convert to GGUF Q5/Q4 easily, you can use the https://github.com/ruSauron/to-gguf-bat script. Download it and put it in the same directory as the sd.exe file, then drag my fp8.safetensors model file onto the .bat file in Explorer. A CMD window will pop up; follow the menu to convert to the format you want.
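For readers who prefer to call sd.exe directly instead of using the batch menu, the sketch below builds a conversion command. It assumes the stable-diffusion.cpp command-line flags `-M convert`, `-m`, `-o`, `--type`, and `-v` as I recall them from that project's README; confirm with `sd --help` for your build. The helper name, the output naming scheme, and the example file name `model-fp8.safetensors` are hypothetical.

```python
import shlex
from pathlib import Path

# Quantization types named in this model card (lowercase, as passed to --type).
QUANT_TYPES = ("q8_0", "q5_1", "q4_1")


def build_convert_command(sd_binary: str, model_path: str, quant_type: str) -> list[str]:
    """Build (but do not run) an sd convert command for one quantization type."""
    if quant_type not in QUANT_TYPES:
        raise ValueError(f"unsupported quantization type: {quant_type}")
    src = Path(model_path)
    # Hypothetical naming scheme: <original stem>-<type>.gguf next to the source file.
    dst = src.with_name(f"{src.stem}-{quant_type}.gguf")
    return [
        sd_binary,
        "-M", "convert",       # assumed: conversion mode
        "-m", str(src),        # assumed: input model
        "-o", str(dst),        # assumed: output file
        "--type", quant_type,  # assumed: target quantization
        "-v",                  # assumed: verbose logging
    ]


if __name__ == "__main__":
    cmd = build_convert_command("sd.exe", "model-fp8.safetensors", "q5_1")
    print(shlex.join(cmd))
    # To actually run it: import subprocess; subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids quoting problems when the model path contains spaces.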

LICENSE

The weights fall under the FLUX.1 [dev] Non-Commercial License.

What is Flux1-DedistilledMixTuned-V1?

Flux1-DedistilledMixTuned-V1 is an image generation model (Safetensors / Checkpoint) created by community user wikeeyang. It is a fine-tune derived from the Flux.1 D base model and is listed under the photorealistic and base model categories.

Version v3.0-Krea fp8: 1 File

About this version: v3.0-Krea fp8

V3.0-Krea retains the main strengths of the Krea model and improves image clarity and compatibility with LoRAs made for the original Dev model. However, the LoRA compatibility gain is small and not ideal, which is the disappointing aspect of this version. Please weigh this before downloading.